The moon doesn’t have sufficient gravity to maintain an atmosphere. Any smog will float out into space, like helium on Earth.
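To make "not enough gravity" a bit more concrete, one common back-of-the-envelope check compares a gas's RMS thermal speed with the body's escape velocity. This sketch assumes the textbook rule of thumb that a gas is kept over billions of years only if escape velocity is at least about six times its RMS speed. The masses, radii, and temperatures are approximate values picked for illustration: roughly 390 K for the lunar dayside, and about 1000 K for Earth's hot upper atmosphere, which is where helium actually escapes.

```python
import math

G = 6.674e-11   # gravitational constant, m^3 kg^-1 s^-2
R_GAS = 8.314   # molar gas constant, J mol^-1 K^-1

# (mass in kg, radius in m), approximate values
BODIES = {"Moon": (7.342e22, 1.7374e6), "Earth": (5.972e24, 6.371e6)}
# molar masses in kg/mol
GASES = {"He": 0.004, "N2": 0.028}

def escape_velocity(body: str) -> float:
    """Escape velocity from the surface, sqrt(2GM/R), in m/s."""
    mass, radius = BODIES[body]
    return math.sqrt(2 * G * mass / radius)

def v_rms(gas: str, temp_k: float) -> float:
    """Root-mean-square molecular speed, sqrt(3RT/M), in m/s."""
    return math.sqrt(3 * R_GAS * temp_k / GASES[gas])

def retained(gas: str, body: str, temp_k: float, factor: float = 6.0) -> bool:
    # Rule of thumb (an assumption, not a precise model):
    # long-term retention needs v_esc >= ~6 * v_rms.
    return escape_velocity(body) >= factor * v_rms(gas, temp_k)

print(round(escape_velocity("Moon")))  # roughly 2.4 km/s
print(retained("N2", "Earth", 288))
print(retained("N2", "Moon", 390))
print(retained("He", "Earth", 1000))
```

Even nitrogen, which Earth holds easily, fails the check on the Moon. Helium fails it for Earth once you use upper-atmosphere temperatures.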

Did you want to add anything to the discussion or just make a snarky comment? I looked through the paper linked in the article and didn’t see a capacity listed.

Our approach directs an alternative Li2S deposition pathway to the commonly reported lateral growth and 3D thickening growth mode, ameliorating the electrode passivation. Therefore, a Li–S cell capable of charging/discharging at 5C (12 min) while maintaining excellent cycling stability (82% capacity retention) for 1000 cycles is demonstrated. Even under high S loading (8.3 mg cm–2) and low electrolyte/sulfur ratio (3.8 µL mg–1), the sulfur cathode still delivers a high areal capacity of >7 mAh cm–2 for 80 cycles.
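The quoted figures can be turned into a sulfur-specific capacity by dividing areal capacity by sulfur loading. That value can then be compared with the theoretical capacity for full conversion of S to Li2S, which is two electrons per sulfur atom. This is a rough sketch. It treats ">7 mAh cm–2" as exactly 7, so the result is a lower bound, and it says nothing about whole-cell capacity.

```python
FARADAY = 96485.0  # Faraday constant, C/mol
M_SULFUR = 32.06   # molar mass of sulfur, g/mol

def theoretical_capacity_mah_g(electrons: int, molar_mass: float) -> float:
    # n*F coulombs per mole of active material; 1 mAh = 3.6 C, so divide by 3.6
    return electrons * FARADAY / (3.6 * molar_mass)

def specific_capacity_mah_g(areal_mah_cm2: float, loading_mg_cm2: float) -> float:
    # (mAh/cm^2) / (mg/cm^2) = mAh/mg; multiply by 1000 for mAh/g
    return areal_mah_cm2 / loading_mg_cm2 * 1000.0

theory = theoretical_capacity_mah_g(2, M_SULFUR)  # S + 2e- -> S^2- (as Li2S)
practical = specific_capacity_mah_g(7.0, 8.3)     # lower bound from ">7 mAh cm-2"
print(round(theory), round(practical), f"{practical / theory:.0%}")
```

At the high-loading condition, this works out to roughly half of sulfur's theoretical capacity.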

A 5C charging rate is great, but it’s pretty useless if the battery is too small to be practical.

Gotta put my chemistry education to good use somehow; I’m certainly not using it in the IT career I ended up in.

The premise of the test is to determine if machines can think. The opening line of Turing’s paper is:

I propose to consider the question, ‘Can machines think?’

I believe the Chinese room argument demonstrates that the Turing test is not valid for determining whether a machine has intelligence. The human in the Chinese room experiment is not thinking to generate their replies; they’re just following instructions, just like the computer. There is no comprehension of what’s being said.

The Chinese room experiment only demonstrates how the Turing test isn’t valid. It’s got nothing to do with LLMs.

I would be curious about that significant body of research though, if you’ve got a link to some papers.

I think the Chinese room argument published in 1980 gives a pretty convincing reason why the Turing test doesn’t demonstrate intelligence.

The thought experiment starts with a computer in a closed room that can converse perfectly in Chinese. Chinese characters are written on a piece of paper and slipped underneath the door, and the computer replies fluently, slipping its reply back out underneath the door. A human who only knows English is then put in the room instead and given English instructions that replicate the computer program's instructions for conversing in Chinese. By following them, the human can converse in Chinese just as perfectly, but still does not actually understand the characters; they are merely following instructions. Searle states that both the computer and the human are doing identical tasks, following instructions without truly understanding or “thinking”.

Searle asserts that there is no essential difference between the roles of the computer and the human in the experiment. Each simply follows a program, step-by-step, producing behavior that makes them appear to understand. However, the human would not be able to understand the conversation. Therefore, he argues, it follows that the computer would not be able to understand the conversation either.

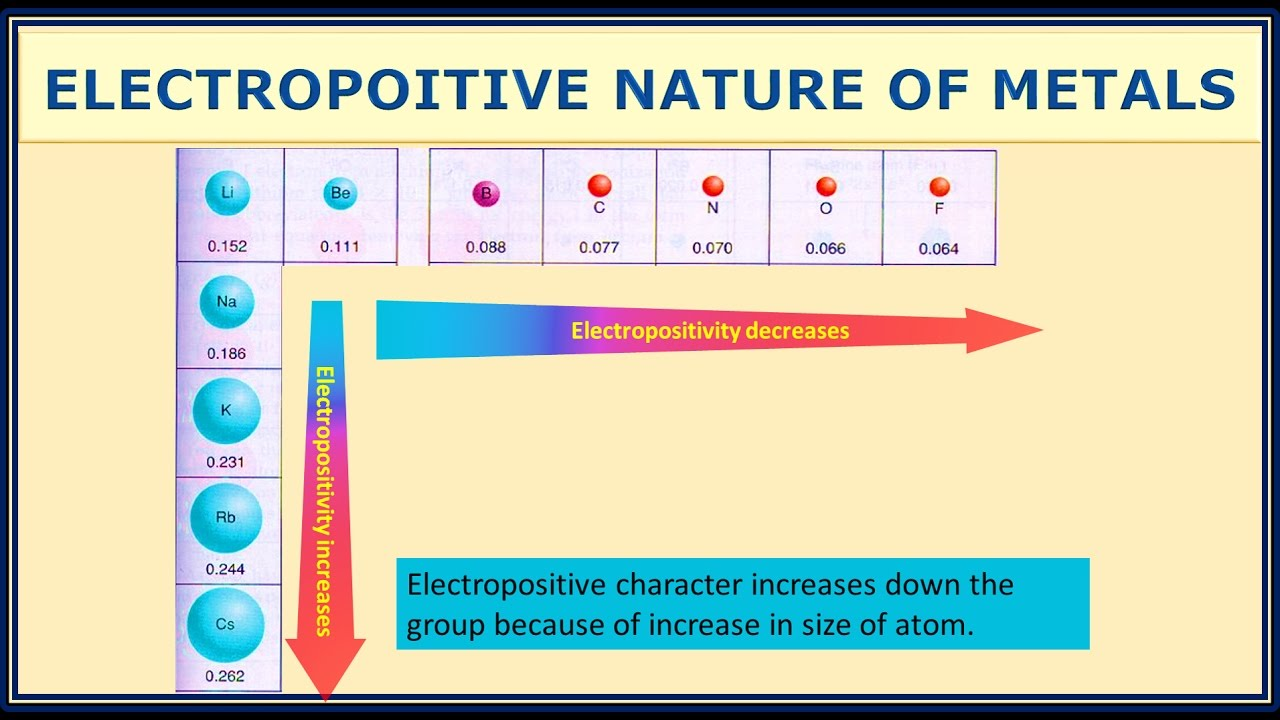

It’s the difference in electronegativity that makes the battery. That’s why you see lithium and oxygen a lot: lithium doesn’t want electrons, and oxygen does. Sodium and potassium are very close in electronegativity, so the salty banana battery wouldn’t be good.

I’m waiting for the cesium/fluorine battery; it should theoretically be awesome. Or extremely explosive.
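The electronegativity-gap heuristic from these comments can be checked with standard Pauling values. This is only a rough proxy. An actual cell's voltage comes from the thermodynamics of its cell reaction, not from a Pauling difference, so the sketch shows the comments' reasoning and does not predict performance.

```python
# Standard tabulated Pauling electronegativities.
PAULING = {"Li": 0.98, "Na": 0.93, "K": 0.82, "Cs": 0.79, "O": 3.44, "F": 3.98}

def delta_chi(a: str, b: str) -> float:
    """Absolute Pauling electronegativity difference between two elements."""
    return abs(PAULING[a] - PAULING[b])

for pair in [("Li", "O"), ("Na", "K"), ("Cs", "F")]:
    print(pair, round(delta_chi(*pair), 2))
```

Under this heuristic, Na/K has almost no gap (about 0.11). Cs/F has the largest gap of any pair here (about 3.19).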

That’s because lithium is in the most electropositive group of elements, and sodium/potassium are too reactive for current technology. Theoretically, I think Na- and K-based batteries should perform better, as they’re even more electropositive than Li.

(Forgive the spelling error in the picture but it was the simplest one I could find quickly)

“Fully charged in 12 minutes” is meaningless without a capacity.
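This point can be made concrete. A C-rate is current normalized to capacity (current = C-rate × capacity), so 5C means a nominal 12-minute charge whatever the cell size. The two capacities below are hypothetical, picked only to show the spread: a 1 mAh cell and a 3000 mAh cell. Both charge in 12 minutes at 5C, but they draw very different currents and store very different amounts of charge.

```python
def charge_current_ma(c_rate: float, capacity_mah: float) -> float:
    """Current needed at a given C-rate: I = C-rate x capacity."""
    return c_rate * capacity_mah

def charge_time_min(c_rate: float) -> float:
    """Nominal full-charge time in minutes; the same for any capacity."""
    return 60.0 / c_rate

# Hypothetical capacities, not taken from the paper.
for capacity in (1.0, 3000.0):
    print(f"{capacity:.0f} mAh: {charge_time_min(5):.0f} min at {charge_current_ma(5, capacity):.0f} mA")
```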

Polymerized cellulose is by definition a biobased polymer; this isn’t anything new. The study doesn’t make any claims that polymerized cellulose is harmful. Calling them “plant fibers” is incorrect, as they aren’t derived directly from a plant the way, say, cotton is. These are manufactured using cellulose.

This is not silly; the study isn’t trying to determine whether these are harmful, just what’s released from boiling a teabag.

I’m not knowledgeable in this area of research, nor am I about to spend an hour going over the paper to write this comment, but collecting data on seemingly mundane things is important too.

Just use a thumb drive

I’m not pretending to understand how homomorphic encryption works or how it fits into this system, but here’s something from the article.

With some server optimization metadata and the help of Apple’s private nearest neighbor search (PNNS), the relevant Apple server shard receives a homomorphically-encrypted embedding from the device, and performs the aforementioned encrypted computations on that data to find a landmark match from a database and return the result to the client device without providing identifying information to Apple nor its OHTTP partner Cloudflare.
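To show what "performs encrypted computations on that data" can mean, here is a toy version using the Paillier cryptosystem. Paillier is additively homomorphic: multiplying ciphertexts adds the plaintexts, and raising a ciphertext to a power multiplies its plaintext. That is enough for a server to score an encrypted query embedding against its own plaintext database with dot products, without ever decrypting the query. This is a swapped-in illustration, not the scheme Apple uses. The tiny primes, the three-number "embeddings", and the landmark names are all made up, and the parameters are completely insecure.

```python
import math
import random

# Toy Paillier keys from tiny primes: illustration only, NOT secure.
def keygen(p: int, q: int):
    n = p * q
    lam = math.lcm(p - 1, q - 1)   # Carmichael function of n
    mu = pow(lam, -1, n)           # valid because we use generator g = n + 1
    return n, (n, lam, mu)         # public key n, private key (n, lam, mu)

def encrypt(n: int, m: int) -> int:
    n2 = n * n
    r = random.randrange(1, n)
    while math.gcd(r, n) != 1:     # r must be invertible mod n
        r = random.randrange(1, n)
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(priv, c: int) -> int:
    n, lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n  # L(u) = (u - 1) / n, then multiply by mu

def encrypted_dot(n: int, enc_query, weights) -> int:
    # Server side: E(x)^w = E(w*x) and E(a)*E(b) = E(a+b), so this
    # yields E(sum(w_i * x_i)) without decrypting the query.
    n2 = n * n
    acc = 1                         # trivial encryption of 0
    for c, w in zip(enc_query, weights):
        acc = (acc * pow(c, w, n2)) % n2
    return acc

n, priv = keygen(61, 53)

# Client: encrypt a hypothetical 3-number "embedding" of the photo region.
query = [3, 1, 4]
enc_query = [encrypt(n, x) for x in query]

# Server: hypothetical landmark database, scored against the encrypted query.
database = {"tower": [3, 1, 4], "bridge": [1, 5, 0], "cathedral": [2, 2, 2]}
enc_scores = {name: encrypted_dot(n, enc_query, vec) for name, vec in database.items()}

# Client: only the key holder can read the scores and pick the best match.
scores = {name: decrypt(priv, c) for name, c in enc_scores.items()}
print(scores)                       # {'tower': 26, 'bridge': 8, 'cathedral': 16}
print(max(scores, key=scores.get))  # tower
```

The server only ever handles `enc_query` and `enc_scores`. The plaintext scores exist only on the device holding the private key.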

There’s a more technical write up here. It appears the final match is happening on device, not on the server.

The client decrypts the reply to its PNNS query, which may contain multiple candidate landmarks. A specialized, lightweight on-device reranking model then predicts the best candidate by using high-level multimodal feature descriptors, including visual similarity scores; locally stored geo-signals; popularity; and index coverage of landmarks (to debias candidate overweighting). When the model has identified the match, the photo’s local metadata is updated with the landmark label, and the user can easily find the photo when searching their device for the landmark’s name.
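The on-device reranking step can be sketched as picking the candidate with the highest combined score over the signals the write-up lists. Apple describes a learned, lightweight model. Everything below is a hypothetical stand-in: the linear score, the weights, the field names, the example candidates, and the negative weight on index coverage (one guess at how "debias candidate overweighting" might work).

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    label: str
    visual_similarity: float  # e.g. from the decrypted PNNS reply
    geo_match: float          # agreement with locally stored geo-signals
    popularity: float
    index_coverage: float     # how heavily the landmark is represented in the index

# Hypothetical hand-picked weights standing in for Apple's learned model.
# The negative coverage weight penalizes over-represented landmarks.
WEIGHTS = {"visual_similarity": 0.6, "geo_match": 0.3, "popularity": 0.1, "index_coverage": -0.2}

def score(c: Candidate) -> float:
    """Weighted sum of the candidate's feature values."""
    return sum(w * getattr(c, name) for name, w in WEIGHTS.items())

def rerank(candidates: list[Candidate]) -> Candidate:
    return max(candidates, key=score)

candidates = [
    Candidate("Eiffel Tower", visual_similarity=0.80, geo_match=0.9, popularity=0.9, index_coverage=0.9),
    Candidate("Tokyo Tower", visual_similarity=0.82, geo_match=0.1, popularity=0.7, index_coverage=0.5),
]
best = rerank(candidates)

# Stand-in for updating the photo's local metadata with the landmark label.
photo_metadata = {"landmark": best.label}
print(photo_metadata)
```

In this made-up case, the visual scores are nearly tied, and the local geo-signal decides the match. That is the kind of on-device information the server never sees.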

It’s not data harvesting if it works as claimed. The data is sent encrypted and not decrypted by the remote system performing the analysis.

From the link:

Put simply: You take a photo; your Mac or iThing locally outlines what it thinks is a landmark or place of interest in the snap; it homomorphically encrypts a representation of that portion of the image in a way that can be analyzed without being decrypted; it sends the encrypted data to a remote server to do that analysis, so that the landmark can be identified from a big database of places; and it receives the suggested location again in encrypted form that it alone can decipher.

If it all works as claimed, and there are no side-channels or other leaks, Apple can’t see what’s in your photos, neither the image data nor the looked-up label.

About 3 × 10⁻¹⁵ atm, or 0.3 nanopascals, of atmosphere.
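Converting between the two units takes a single division by the standard atmosphere, 101,325 Pa (exact by definition). This quick sketch shows that 0.3 nPa comes out to roughly 3 × 10⁻¹⁵ atm.

```python
PA_PER_ATM = 101_325.0  # one standard atmosphere in pascals (exact by definition)

def pa_to_atm(p_pa: float) -> float:
    return p_pa / PA_PER_ATM

lunar_pa = 0.3e-9  # 0.3 nanopascals
print(f"{pa_to_atm(lunar_pa):.1e} atm")
```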

I feel like saying the moon technically has an atmosphere is like saying an astronaut has an atmosphere if they farted in space sans spacesuit because some gas lingers around them.